Face detection#

Although Medusa’s main focus is 3D/4D reconstruction, it also contains functionality for face detection, facial landmark prediction, and cropping as these steps often need to be performed before feeding images into reconstruction models.

In this tutorial, we will demonstrate Medusa’s face detection functionality.

Detection on (single) images#

The first step in many face analysis pipelines is face detection. Medusa contains two classes that perform face detection:

SCRFDetector: a detection model based on InsightFace’s SCRFD modelcitep{};YunetDetector: a detection model implemented in OpenCV

We recommend using the SCRFDetector as our experience is that it is substantially more accurate than YunetDetector (albeit a bit slower when run on CPU); if you want to use the YunetDetector, make sure to install OpenCV first (pip install python-opencv). So we’ll use the SCRFDetector for the rest of this section.

from medusa.detect import SCRFDetector

Under the hood, SCRFDetector uses an ONNX model provided by InsightFace, but our implementation is quite a bit faster than the original InsightFace implementation as ours uses PyTorch throughout (rather than a mix of PyTorch and numpy).

The SCRFDetector takes the following inputs upon initialization:

det_size: size to resize images to before passing to the detection model;det_threshold: minimum detection threshold (float between 0-1)nms_threshold: non-maximum suppression threshold (boxes overlapping more than this proportion are removed)device: either “cpu” or “cuda” (determined automatically by default)

The most important arguments are det_size and det_threshold; a higher det_size (a tuple with two integers, width x height) leads to potentially more accurate detections but slower processing; increasing det_threshold leads to more conservative detections (fewer false alarms, but more misses) and vice versa.

In our experience, the defaults are fine for most images/videos:

detector = SCRFDetector()

Now let’s apply it to some example data. We’ll use a single frame from out example video:

from medusa.data import get_example_image

img = get_example_image(load=True)

Here, img represents is loaded as a PyTorch tensor, but the detectors in Medusa can deal with paths to images or numpy arrays, too. Now, to process this image with the detector, we’ll call the detector object as if it is a function (which internally triggers the __call__ method):

det = detector(img)

The output of the detector call, det, contains a dictionary with information:

det.keys()

dict_keys(['conf', 'bbox', 'lms', 'img_idx', 'n_img'])

Notably, all values of the dictionary are PyTorch tensors. The most important keys are:

conf: the confidence of each detection (0-1)lms: a set of five landmark coordinates per detectionbbox: a bounding box per detection

Let’s take a look at conf:

conf = det['conf']

print(f"Conf: {conf.item():.3f}, shape: {tuple(conf.shape)}")

Conf: 0.884, shape: (1,)

So for this image, there is only one detection with a confidence of 0.884. Note that there may be more than one detection per image when there are more faces in the image!

Now, let’s also take a look at the bounding box for the detection:

det['bbox']

tensor([[187.4764, 20.0962, 414.5220, 337.8104]])

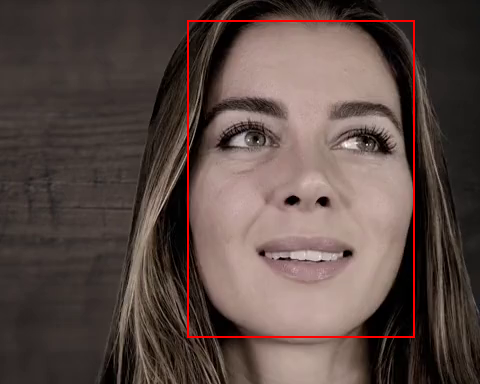

The bounding box contains four values (in pixel units) that represent the box’ mimimum x-value, minimum y-value, maximum x-value, and maximum y-value (in that order). We can in fact visualize this bounding box quite straightforwardly using torchvision:

import torch

from IPython.display import Image

from torchvision.utils import draw_bounding_boxes, save_image

# Note that `draw_bounding_boxes` expects the img to be in C x H x W format and uint8,

# so squeeze out batch dimension and convert float32 to uint8

red = (255, 0, 0)

img = img.squeeze(0).to(torch.uint8)

img_draw = draw_bounding_boxes(img, det['bbox'], colors=red, width=2)

# Save image to disk and display in notebook

save_image(img_draw.float(), './viz/bbox.png', normalize=True)

Image('./viz/bbox.png')

Looks like a proper bounding box! Now, let’s finally look at the predicted facial landmarks:

det['lms'] # B x 5 x 2

tensor([[[249.6934, 138.3164],

[364.9851, 140.5700],

[311.6084, 185.3770],

[256.7564, 250.2903],

[357.6646, 251.7064]]])

As you can see, each detection also comes with 5 landmarks consisting of two values (one for X, one for Y) in pixel units. As we’ll show below (again, using torchvision), these landmarks refer to the left eye, right eye, tip of the nose, left mouthcorner, and right mouth corner:

from torchvision.utils import draw_keypoints

# Note that `draw_keypoints` also expects the img to be in C x H x W format

img_draw = draw_keypoints(img, det['lms'], colors=red, radius=4)

# Save image to disk and display in notebook

save_image(img_draw.float(), './viz/lms.png', normalize=True)

Image('./viz/lms.png')

Detection on batches of images#

Thus far, we only applied face detection to a single image, but Medusa’s face detectors also work on batches of images such that it can be easily used to process video data, which gives us a good excuse to showcase Medusa’s powerful BatchResults class (explained later).

Let’s try this out on our example video, which we load in batches using Medusa’s VideoLoader:

from medusa.data import get_example_video

from medusa.io import VideoLoader

vid = get_example_video()

loader = VideoLoader(vid, batch_size=64)

# The loader can be used as an iterator (e.g. in a for loop), but here we only

# load in a single batch; note that we always need to move the data to the desired

# device (CPU or GPU)

batch = next(iter(loader))

batch = batch.to(loader.device)

# B (batch size) x C (channels) x H (height) x W (width)

print(batch.shape)

torch.Size([64, 3, 384, 480])

Initialize the detector as usual and call it on the batch of images like we did on a single image:

detector = SCRFDetector()

out = detector(batch)

print(out.keys())

print(out['bbox'].shape)

dict_keys(['conf', 'bbox', 'lms', 'img_idx', 'n_img'])

torch.Size([64, 4])

To visualize the detection results of this batch of images, we could write a for-loop and use torchvision to create for each face/detection and image with the bounding box and face landmarks, but Medusa has a specialized class for this type of batch data to make aggregation and visualization easier:

from medusa.containers import BatchResults

from IPython.display import Video

# `BatchResults` takes any output from a detector model (or crop model) ...

results = BatchResults(**out)

# ... which it'll then visualize as a video (or, if video=False, a set of images)

results.visualize('./viz/test.mp4', batch, video=True, fps=loader._metadata['fps'])

# Embed in notebook

Video('./viz/test.mp4', embed=True)

The BatchResults class is especially useful when dealing with multiple batches of images (which will be the case for most videos). When dealing with multiple batches, initialize an “empty” BatchResults object before any processing, and then in each iteration call its add method with the results from the detector.

Here, we show an example for three consecutive batches; note that BatchResults will store anything you give it, so here we’re also giving it the raw images (using the images=batch):

loader = VideoLoader(get_example_video(n_faces=2))

results = BatchResults()

for i, batch in enumerate(loader):

batch = batch.to(loader.device)

out = detector(batch)

results.add(images=batch, **out)

if i == 2:

break

Right now, the results object contains for each detection attribute (like lms, conf, bbox, etc) a list with one value for each batch:

# List of length 3 (batches), with each 64 values (batch size)

results.conf

[tensor([0.8863, 0.8227, 0.8861, 0.8192, 0.8868, 0.8186, 0.8862, 0.8176, 0.8880,

0.8181, 0.8861, 0.8167, 0.8916, 0.8136, 0.8912, 0.8116, 0.8836, 0.8031,

0.8835, 0.8020, 0.8846, 0.7994, 0.8844, 0.7998, 0.8855, 0.8021, 0.8853,

0.8025, 0.8805, 0.8020, 0.8806, 0.8002, 0.8801, 0.8010, 0.8799, 0.8009,

0.8747, 0.8016, 0.8753, 0.8002, 0.8735, 0.8013, 0.8739, 0.8018, 0.8739,

0.8019, 0.8734, 0.8025, 0.8759, 0.8029, 0.8760, 0.8018, 0.8723, 0.7966,

0.8719, 0.7967, 0.8609, 0.7933, 0.8607, 0.7927, 0.8756, 0.7921, 0.8756,

0.7925]),

tensor([0.8586, 0.7972, 0.8593, 0.7984, 0.8390, 0.7968, 0.8380, 0.7957, 0.8365,

0.7893, 0.8371, 0.7910, 0.8280, 0.7969, 0.8278, 0.7969, 0.8298, 0.8070,

0.8301, 0.8071, 0.8353, 0.8162, 0.8352, 0.8153, 0.8399, 0.8205, 0.8388,

0.8204, 0.8447, 0.8217, 0.8450, 0.8211, 0.8561, 0.8195, 0.8567, 0.8192,

0.8674, 0.8237, 0.8675, 0.8243, 0.8716, 0.8239, 0.8715, 0.8262, 0.8800,

0.8211, 0.8810, 0.8223, 0.8831, 0.8213, 0.8829, 0.8219, 0.8729, 0.8212,

0.8727, 0.8211, 0.8781, 0.8181, 0.8780, 0.8178, 0.8812, 0.8119, 0.8814,

0.8126]),

tensor([0.8858, 0.8072, 0.8858, 0.8073, 0.8836, 0.8024, 0.8833, 0.8031, 0.8827,

0.8030, 0.8831, 0.8024, 0.8817, 0.8068, 0.8811, 0.8076, 0.8791, 0.8047,

0.8794, 0.8051, 0.8799, 0.8037, 0.8802, 0.8012, 0.8756, 0.7987, 0.8749,

0.7983, 0.8689, 0.7947, 0.8681, 0.7958, 0.8649, 0.7904, 0.8637, 0.7909,

0.8618, 0.7898, 0.8638, 0.7873, 0.8662, 0.7894, 0.8657, 0.7893, 0.8685,

0.7920, 0.8700, 0.7913, 0.8755, 0.7958, 0.8741, 0.7958, 0.8789, 0.7920,

0.8794, 0.7914, 0.8775, 0.7923, 0.8768, 0.7917, 0.8781, 0.7949, 0.8772,

0.7940])]

We can concatenate everything by calling the object’s concat method:

results.concat()

results.conf.shape # 192 (= 3 * 64)

torch.Size([192])

Now, we can visualize the results as before (note that we give it the raw images as well):

results.visualize('./viz/test.mp4', results.images, video=True, fps=loader._metadata['fps'])

Video('./viz/test.mp4', embed=True)